In Part 1 we talked about the reasons the authors felt some of the existing research was not credible. They wrapped up their critique of the Kanin study with the following quote (and if you read Part 1, you can probably guess who they are going to cite):

As a result of these and other serious problems with the “research,” Kanin’s (1994) article can be considered “a provocative opinion piece, but it is not a scientific study of the issue of false reporting of rape. It certainly should never be used to assert a scientific foundation for the frequency of false allegations” (Lisak, 2007, p. 1).

In contrast, when more methodologically rigorous research has been conducted, estimates for the percentage of false reports begin to converge around 2-8%.

Before I get into the primary study that they reference, let me give you an idea of just what kind of games they have in store for us. Here is what they say about the second study used to back up their 2-8% range:

For example, Clark and Lewis (1977) examined case files for all 116 rapes investigated by the Toronto Metropolitan Police Department in 1970. As a result, they concluded that seven cases (6%) involved false reports made by victims.

Ok, so we are back to using data from a single police department, in a single year, and in this case from about 40 years prior to when the article was written, but at least it is in the range they are claiming. They then go on to say this:

There were also five other reports made by someone other than the victim that were deemed by these researchers to be false (e.g., a relative or boyfriend).

So, to clarify, the study found that 7+5 = 12 out of 116 reported rapes were false. For most people that would be a false reporting rate of 10.3%, which is outside the 2-8% range [1], but apparently not for the authors. It would seem that rape reports made by someone other than the victim do not count as false despite being, well, false [2][3]. At this point all I can say is that at least they are quoting the research of others – if this is the kind of logic they are using, I can only imagine what the results would be if they got to design the study themselves.
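The arithmetic is easy to check. Here is a minimal Python sketch that uses only the counts quoted above from Clark and Lewis; the helper name `rate_percent` is just an illustrative choice.

```python
def rate_percent(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 1)


# Counts quoted in the article's summary of Clark and Lewis (1977).
TOTAL_REPORTS = 116
FALSE_BY_VICTIM = 7
FALSE_BY_OTHERS = 5

victim_only = rate_percent(FALSE_BY_VICTIM, TOTAL_REPORTS)
all_false = rate_percent(FALSE_BY_VICTIM + FALSE_BY_OTHERS, TOTAL_REPORTS)

print(f"False reports made by victims only: {victim_only}%")
print(f"All false reports: {all_false}%")
print(f"Inside the 2-8% range? {2 <= all_false <= 8}")
```

Counting only the seven victim-made reports keeps the rate inside the claimed range; counting all twelve pushes it out.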

Back to their other sources – what is the primary study that they cite?

For example, in a multi-site study of eight U.S. communities involved in the “Making a Difference” (or “MAD”) Project, data were collected by law enforcement agencies for all sexual assault reports received in an 18-24 month period. Of the 2,059 cases that were included in the study, 140 (7%) were classified as false.

A multi-site, multi-year study? Seems like we actually have some good data to work with here. They then give a website where you can find out more information about the MAD Project, http://www.evawintl.org. Clicking through you’ll find out that their primary source for a “methodologically rigorous” study on the number of false rape reports comes from the organization End Violence Against Women International. If someone tried to present their view that the number of people killed by cigarettes each year is actually pretty low and their primary source was a study done by Philip Morris, how much credence would you lend that research? What about a study on gun violence done by the NRA? And yet, for some unknown reason, the authors don’t seem to think that End Violence Against Women International might in some way be biased as to the frequency of false rape reports.

As it turns out, there is actually a very good reason why the authors don’t see a problem with that, which they are pretty upfront about – and by “are pretty upfront about” I mean “bury it in the footnotes.” [4]

1 Dr. Lonsway is the Research Direcotr [sic] of EVAW International; Sgt. Archambault is the Executive Director of EVAW Internatiaonal;… This article is an adapted excerpt from the training module of the same name in the On-Line Training institute hosted by End Violence Against Women (EVAW) International, at http://www.evawintl.org/evaw_courseware.

So not only do they try to disguise the fact that the main source for their hypothesis is a study that two of the authors conducted themselves for an organization with a clear stake in the matter, but the entire article is essentially an advertisement for their training sessions. Think I’m exaggerating? Check out the last page of the article:

[Image: the last page of the article]

Perhaps I have not been keeping up with the times, but I hadn’t realized that scholarly articles came with coupons now. And we are supposed to believe that Kanin’s study is the “provocative opinion piece”?

So how did they get to the 7% number? The first problem we are going to run into has to do with the research itself. Take a look at EVAW’s page on the MAD Project. Despite the data collection period being from 2005-2006, there is very little in the way of actual published research. They have a link to research materials where you can see some of the data collection forms they used, a pdf on the research methods, and a collection of slides listing some of the basic data results, but that is pretty much it. That basic data results document is over 5 years old at this point, it has been over 8 years since the data collection periods, and there is still no comprehensive study document available, which strikes me as a little strange.

One of the key questions in evaluating their claim is going to be “How, exactly, is a false report defined?” As it turns out, that part isn’t entirely clear cut. In fact, it isn’t until paragraph 6 of the section entitled “What is the Actual Definition of a False Report?” that we get to this:

Rather, investigators and prosecutors must base all final judgments of a sexual assault report on the findings from a thorough, evidence-based investigation. The determination that a report is false can then only be made when there is sufficient evidence to establish that the sexual assault did not happen (was not completed or attempted.)

OK, so not a terribly unreasonable definition, but to understand how this has the potential to skew the data, we have to go back to the research methods I mentioned before. If you read through it, you’ll find that the 8 sites were not chosen randomly. Communities had to apply to be in the study and there were 88 US applications in total. These applications were then evaluated by a selection committee to select the final 8 cities [5][6]. After selection, the 8 communities came together for a 3-day training conference, and that is where I think things get particularly interesting. While they don’t specify the exact training that occurred, we know that this article is adapted from some of their training materials. So what kind of things does their training have to say about false rape reports?

To start off with, here are some things that someone filing a rape report might do, which the authors say happen for perfectly justifiable reasons:

- “alter or exaggerate the details of what happened is to create a case that seems more believable”

- “report that the suspect used a weapon when this is not really true”

- “describe threats of physical violence that were not really made”

And if that happens?

When we think about these dynamics, it makes sense why victims might provide inconsistent, incomplete, or even untrue statements. Yet many investigators and prosecutors have seen this as evidence of a “false report.” In fact, none of these situations meets the actual criteria for a false report

Um, OK. Doesn’t that create credibility problems for the person making the report though? I mean, if there are valid reasons why a person might report things that didn’t happen, how can we believe the rest of what they report is accurate?

For all of the reasons provided above, it is understandable that victims often give information in their statement that is incomplete, inconsistent or even untrue. Nonetheless, these issues can destroy the victim’s credibility if they are not handled by criminal justice professionals.

“handled”? Uh oh, that sounds kind of ominous. At first the authors just describe exploring issues “gently and nonjudgementally.” A little later on though, they get to this suggestion:

Problems such as inconsistent statements from the victim can also be decreased by reducing the number of unnecessary professional contacts

Are they suggesting that to prevent credibility problems that result when important elements of the accuser’s story turn out to be false, they should make sure they aren’t asked what happened too many times?

This does not mean that investigators and prosecutors should be reluctant to conduct follow-up interviews during the course of the investigation, as additional evidence and information is uncovered. In fact, such follow-up interviews are necessary to conduct a comprehensive investigation.

Phew, I was worried for a second there.

The purpose of any follow-up interviews should therefore be to gather additional information and clarify any questions, not to go over the same information again.

So ask them as many questions as you want, just not about anything you’ve already asked them lest they give a different answer. Gotcha.

However, as important as it is to seek clarification of such inconsistencies or omissions, it is equally important to highlight the accuracy of other details in the victim’s statement. Thus, a primary goal of any sexual assault investigation will be seeking corroboration for details in the victim’s account of events, regardless of whether or not they are relevant for establishing an element of the offense.

She said there was a gun when there wasn’t. She also said he threatened her with violence when he didn’t. Buuuuuuut she said she had a salad for dinner last night and we found a receipt for it, so story checks out. Book ’em.

OK, but what if after all of the gentleness, and not asking the same question more than once, and not getting too worked up about any key elements that turn out to be false, what if after all that the police still feel like something isn’t quite right?

As McDowell and Hibler (1987) describe, any effort to challenge the validity of a sexual assault report could be devastating if the suspicion is misplaced and the victim really was assaulted. Such a challenge would certainly destroy the trusting relationship that must develop between criminal justice professionals and victims for successful investigation and prosecution.

and

Given the size of the caseload that most investigators and prosecutors handle, it seems difficult to justify the inordinate time that would be involved in investigating and prosecuting someone for filing a false report—given that it is typically only a misdemeanor offense.

and

While it is understandable that investigators might want to prove that the report is false out of a sense of frustration and a determination to get to the truth, this is probably not the best use of limited resources.

and

It is also important to keep in mind that most false reports of sexual assault are typically the result of personal and emotional problems, rather than vengeful motives.

I have to detour again here. There is no source cited for that last statement. In the Kanin study from Part 1 of this series, there was an analysis of the motives behind false reporting, but the authors dislike that study so much they pretend it doesn’t exist. You may think I’m being hyperbolic here, but after they stated the results from the MAD study they said the following:

To date, the MAD study is the only research conducted in the U.S. [7] to evaluate the percentage of false reports made to law enforcement.

See? The Kanin study doesn’t exist anymore. This ends up not being a trivial point either, because journalists aren’t always the most thorough. In a fact checking column on the topic of rape statistics, the Washington Post took them at their word that the MAD study was the only one done in the US.

The “Making a Difference” Project, which used data collected by law enforcement agencies over 18 to 24 months, found 7 percent of cases that were classified as false. That study is the “only research conducted in the U.S. to evaluate the percentage of false reports made to law enforcement,” according to the National Center for the Prosecution of Violence Against Women.

Detour over, back to my point.

Do you see the situation they are setting up here?

- A rape report can only be classified as false after a thorough fact-based investigation (a confession that the report was false is not enough)

- Don’t ask the same question twice so they can’t change their story

- Even if you find key parts of their story to be false, don’t pay much attention to it

- Challenging the validity of their report could be devastating

- Even if you think the report is false, is it really worth your time to prove that it is?

If you need to do a thorough investigation to classify a report as false, but you can’t ask too many questions, you can’t challenge the validity of their story, and you probably shouldn’t be wasting time trying to investigate a false report, how can a report ever be classified as false? While the authors present this 7% false report rate as very low, I’m kind of shocked they were able to classify that many using these procedures.

Finally, we get to what I think is the most deceptive part of their false report statistics. When they say that only 2-8% of rape reports are false, the implication is that the rest are true. Based on the ways their source data was constructed though, “2-8% of rape reports are false” would only be an accurate statement if you include the addendum “at bare minimum.” 2-8% isn’t actually a ceiling, or even a range, it is merely a floor.

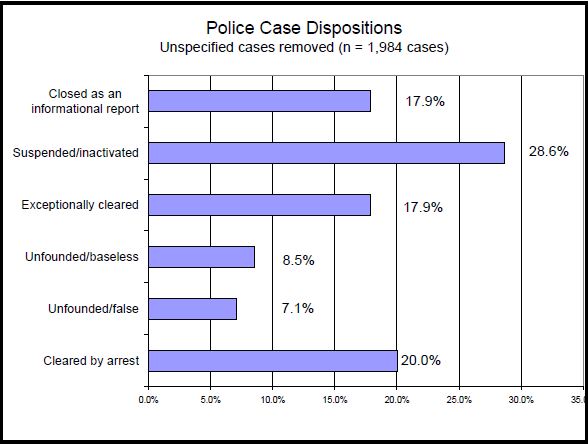

Let’s take a look at what the MAD study actually found. On page 9 of the basic results we find the following chart:

Unfounded/false is the 7.1% we’ve already dealt with, but what is Unfounded/baseless? EVAW actually has a helpful document on that. It includes the following description of baseless:

Cases determined to be baseless include those that do not meet the elements of the offense and those that were improperly coded as a sexual assault in the first place.

They even give an example

an adult woman is coerced into having sex when her boyfriend who said he would break up with her if she doesn’t do what he asked.

See? The report is unfounded but it isn’t Unfounded/false, so it doesn’t count. What about Closed as an informational report? If you look at the Disposition section of the police tracking form you’ll see a fuller description:

Closed as an informational report (elements of a sexual assault offense not met)

Once again, there wasn’t actually a rape, but the report doesn’t get counted as false. Those last two categories combined make up 26.4% of the total rape reports. Isn’t manipulating statistics fun?

Suspended/inactivated are the cases where there isn’t enough evidence for an arrest, and they make up another 28.6% of the cases.

Exceptionally cleared is a bit trickier. EVAW has this document which goes into a bit more detail about clearance methods, and the exceptional clearance section starts on page 5. It is supposed to be for when the police have everything they need to make an arrest, but for some reason out of their control, they cannot (for example, the suspect dies or is arrested in another jurisdiction). As they put it in the article:

It is therefore clear that the purpose of exceptional clearance is for police agencies to “count” cases as cleared when they have done their job, but they were prevented by some outside factor from moving forward with an arrest and prosecution. As described by the FBI, exceptional clearance offers a way for police agencies to clear offenses once “they have exhausted all leads and have done everything possible in order to clear a case” (UCR Handbook, p. 80).

How is it used in practice?

It is clear that this is how the definition was interpreted by personnel in the two police agencies involved in the research; the most common reason given for exceptional clearance was “a prosecutorial declination to file charges because of insufficient evidence” (Spohn & Tellis, 2011, p. 125). Comments from expert reviewers also suggested that this practice is in place in other agencies as well.

This isn’t particularly surprising. There are only so many ways police can dispose of a case. If they can’t make an arrest, and they can’t easily put it as false, baseless, or informational report, the only choices left are suspended/inactive or exceptionally cleared. If you were a police officer, a profession where one of the primary performance metrics is clearance rate, would you say “Hey, we did our job and found the guy, and we’d arrest him for it too, but the darn prosecutor just won’t let us” and clear it, or would you put it in suspended/inactive?

Finally, we get to arrested, which makes up a mere 20%.
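As a sanity check on the breakdown, here is a short Python sketch that adds up the disposition shares quoted in this post. The figures come straight from the text (the 17.9% for exceptionally cleared is the one used further down), "20%" is treated as exactly 20.0, and the dictionary labels are just illustrative.

```python
# Shares of police dispositions (percent of all reports) as quoted in this post.
dispositions = {
    "Unfounded/false": 7.1,
    "Unfounded/baseless + closed as informational": 26.4,
    "Suspended/inactivated": 28.6,
    "Exceptionally cleared": 17.9,
    "Arrested": 20.0,  # quoted as "20%"; assumed to be exactly 20.0 here
}

total = round(sum(dispositions.values()), 1)

# Everything that was neither classified as false nor ended in an arrest.
unresolved = round(
    sum(
        share
        for name, share in dispositions.items()
        if name not in ("Unfounded/false", "Arrested")
    ),
    1,
)

print(f"Total of all categories: {total}%")
print(f"Neither classified false nor arrested: {unresolved}%")
```

Under those assumptions the categories account for all of the reports, and nearly three quarters of them sit in neither the "false" bucket nor the "arrested" bucket.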

The best way to demonstrate how misleading this methodology is, is to use their data and hyper-conservative classifications to answer a different question: What percentage of rape reports are true?

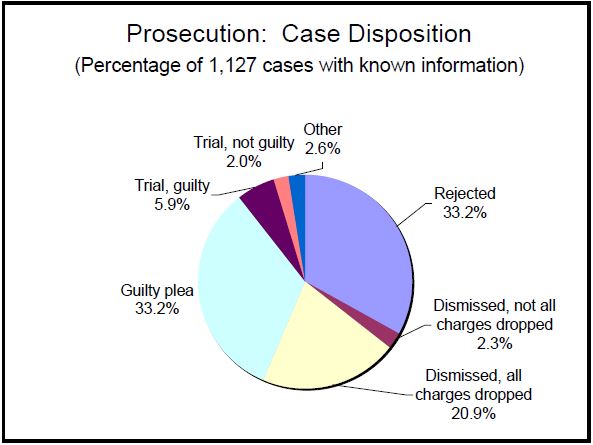

The 20% of reports that ended in arrest is a good starting point, but being arrested for a crime is hardly solid proof that you actually committed it. Luckily, there is another chart in the MAD results that can help us out. On page 12 we find the breakdown of Prosecution dispositions. They don’t provide enough information for us to know how many of the cases in the police disposition results are in the prosecution disposition results, but we can use them to make some simplifying assumptions.

From this we can see that 39.1% of the cases end in either a guilty verdict or a guilty plea. Multiplying that by the 20% of police cases that result in arrest, we are left with the 7.8% I used at the start of my first post. Even this isn’t a good number to use though. If a confession that a report is false isn’t enough to classify the report as false, then the corollary is that a guilty plea isn’t enough to classify a case as true. After all, it would not be difficult to imagine a scenario where, for any number of reasons, someone pleads guilty to a crime that they didn’t commit. Counting only the 5.9% of cases that end in a guilty verdict, 20% x 5.9% leaves us with a “true” rate of just 1.2%. Even if we decide to be generous and include not just the 20% arrested, but also the 17.9% exceptionally cleared, the number still only goes up to 2.2%.
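The multiplication above can be reproduced with a few lines of Python. This is only a sketch under the same simplifying assumption the post makes, namely that the prosecution percentages apply uniformly to the cases the police pass along. The variable names are illustrative, and the 5.9% is read here as the guilty-verdict share, which is an interpretation of the figure rather than something the results document is quoted as stating.

```python
# Police disposition shares (percent of all reports), as quoted in the post.
ARRESTED = 20.0
EXCEPTIONALLY_CLEARED = 17.9

# Prosecution disposition shares (percent of prosecuted cases).
GUILTY_VERDICT_OR_PLEA = 39.1
GUILTY_VERDICT_ONLY = 5.9  # assumption: interpreted as guilty verdicts alone


def joint_percent(police_share: float, prosecution_share: float) -> float:
    """Multiply two percentages and return the result as a percentage."""
    return round(police_share * prosecution_share / 100, 1)


verdict_or_plea = joint_percent(ARRESTED, GUILTY_VERDICT_OR_PLEA)
verdict_only = joint_percent(ARRESTED, GUILTY_VERDICT_ONLY)
generous = joint_percent(ARRESTED + EXCEPTIONALLY_CLEARED, GUILTY_VERDICT_ONLY)

print(f"Arrested x (verdict or plea): {verdict_or_plea}%")
print(f"Arrested x verdict only: {verdict_only}%")
print(f"(Arrested + exceptionally cleared) x verdict only: {generous}%")
```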

At the end of the day, my point is this – If someone tries to advocate a particular policy based on the fact that “only 2-8% of rape reports are false” an appropriate response might be “Sure, but only 1-8% are true.”

I’ll close with one of the quotes from the article I agree with, and I think it sums up my thoughts on the topic pretty well

Of course, in reality, no one knows—and in fact no one can possibly know—exactly how many sexual assault reports are false.

1. While an extra 2.3% might not mean much in absolute terms, it does represent between 29%-115% of the values claimed to be accurate

2. I am left to wonder whether or not those five people accused of rape and investigated by the police take comfort in the fact that the false rape reports filed against them weren’t actually false.

3. On a positive note, at least they didn’t try to hide it in a footnote this time

4. OK, so this one they tried to hide in a footnote

5. Austin, TX; Bozeman, MT; Columbia, SC; Grand Rapids, MI; Jacksonville, FL; San Diego, CA; Kansas City, MO; and Washington, DC

6. A brief side note here, while these 8 cities are decently spread out across the country (I’ve created a map here), there are large parts of the country that are not covered (nothing in the Northeast for example). This calls into question how representative their findings are for the country at large, but I’m not going to go deeper into this as I have greater concerns with other aspects.

7. Oh, did I not mention that of all the studies they cite to justify the 2-8% range, only one of them actually examines false reports in the US?

You’ve done amazing work here, kudos. I look forward to future posts.

Appreciate the kudos. I’m hopeful that the reason you liked the posts is because you enjoy the analysis of misleading statistics in general. If, however, your praise is due to the fact that my first subject was a study often used by those of a particular ideological persuasion, you may find yourself disappointed in the future content here. Put more bluntly – if you think this is going to turn into some sort of “men’s rights” blog based on the first topic I chose, you are sorely mistaken.

Your comment here reads as unduly defensive, and I think that’s unfortunate. You’ve done great work irrespective of the topic considered. You’ve every right to be as preemptive as you wish when addressing comments in your blog, but in this particular case, the blunt caution struck me as out of proportion with what was actually said to you (e.g., apolitically-worded praise). But then you probably have access to analytics data, and it’s entirely possible that you’ve determined that the person you just replied to arrived here via a hub like /r/mensrights, and have tailored your response accordingly. For instance, I arrived here after finding your link posted there. So I’m not attempting to make you sound superficially unreasonable.

So, to address the issue of men’s rights: it’s true that there are a number of men’s rights advocates or pro-men’s rights people (men and women alike) who will find this information useful or interesting, but that’s a contextual matter because the issue of false rape accusations is one which is commonly understood to disproportionately affect men. Hence the interest. If the work is solid and if it is cited responsibly such that you aren’t taken out of context, this shouldn’t matter. If that does happen, I’ll be right there with you, condemning any and all attempts to misrepresent or politically weaponize your work.

So, although I cannot really unpack the implications of your comment, please be wary of painting a group with too broad a brush. Alternately, at the very least, I encourage you to consider why you might be making this warning to blighter in the first place. The words “you are sorely mistaken” are somewhat heavy, given the neutrally-worded praise.

My perspective is that by virtue of having tackled this topic, you’ve potentially opened yourself to a barrage of potential criticism by those with a vested interest in maintaining the status quo on the matter (e.g., the widespread misapprehension about the stats you’ve analyzed here). That won’t necessarily happen, and I hope it doesn’t, but if it does, please take a moment to consider that this wouldn’t be the “fault” of the people praising your work so much as it would be the “fault” of those upset with your revelations. The perspective I’m offering isn’t borne out of malice toward any one group of people, mind you. I’ve just seen it happen before, and I’ve experienced it myself.

Anecdote: I’ve had a great deal of difficulty discussing rape statistics with friends who subscribe to specific identity politics, probably because there is no easily neutral ground to stand on in contemporary social discourse on the matter, and any assumption made by my conversational partners as to my agenda (despite my adherence to the apolitical requirements of objective discourse) is enough for them to disengage entirely, or, at worst, condemn and vilify me as a monster holding positions I’ve never claimed for myself, or evidenced otherwise. Again, this is a personal story being enlarged for the purposes of explication, but it’s one which I’ve heard recounted numerous times on the /r/mensrights subreddit (and elsewhere).

For full disclosure, I’m a liberal, socially anti-authoritarian, pro-men’s rights, pro-women’s rights, egalitarian, humanist, democratic socialist. I’m not spouting this from out of some kind of conservative maw. I value weighing issues on a case-by-case basis so as to measure inequality, and that perspective accurately describes a number of people on the same board.

I guess what I’m ultimately saying is that I hope you don’t feel somehow at risk of guilt by association for the fact that the work you’ve done here will inevitably be appreciated by those who have been attempting to have this conversation for a while. If that is the case, I respectfully ask you to merely consider that any assumptions you might have about “men’s rights” advocates may be as skewed as the statistical analyses you’ve critiqued in these posts. Note that I cannot assume anything about your degree of ground-level literacy about the groups in question, and that I mean no offense whatsoever in my concern. I’m speaking principally in response to the tone of your comment above, and am trying to preempt something which may or may not be the case.

Thank you for this analysis. I found it illuminating. And thanks for reading.

I look forward to future topics.

My comment stems from both the benefit of analytics data as well as knowledge of all of the comments that were trashed for not meeting my commenting policy (which is pretty much “be nice”). Additionally, while I replied to a specific commenter, my caution was meant more as a general caution rather than directed at blighter in particular. In contrast to those whose comments did not get approved, I appreciate you making your points in the civil manner that you did and thanks for reading.

Agh. I wrote a long reply to this below, but I forgot to click “reply” first. Apologies!

Welcome to the wonderful world of blog comments, where people you don’t know impute motives you don’t have, and murder a thousand words to do so as if this was their soapbox rather than yours. This isn’t about advocating for any side, but advocating for accuracy. You’ve done so exceptionally well, and it’s very much appreciated.

Oh yes, I am learning that very quickly. Glad you enjoyed.

The topic was a good one, and one which has a surfeit of simply terrible statistics floating around, but your analysis is more what I was applauding. It was well researched and well written. I’ll enjoy seeing more on whatever topic. Hopefully even when my own ox is being gored – though that’s unlikely b/c all of my beliefs are empirically sound and deeply grounded in serious research, lol.

I’m immensely hopeful for continued excellence, though I expect you’ll have a hard time pumping content out fast enough to maintain readership given the time and thought you clearly put into these two posts. Either way, thanks for this and good luck in the future.

Thanks for the reply. The fact that you received a number of trash-worthy comments is unfortunate, and it goes a long way toward explaining the purpose of your comment above. I wasn’t aware that this was going on, but I should have assumed, because it often does.

In this climate, it is often difficult to tell what is legitimately representative of a ‘movement’ (everything seems so amorphous and overlapping these days), what is representative of a fringe element within said movement, and what is merely the output of a shill/sock-puppeteer working to discredit said movement by enlarging and parodying its worst features while feigning civility or authenticity.

The benefit of analytics data will at least help you pin down the source of the worst commenters, but then it’s not uncommon for people to use highly contentious boards opposing their personal views, like /r/mensrights or /r/feminism, to identify sources to launch discrediting campaigns on. So it’s a mess.

That said, it is true that there is a subset of self-described MRAs evincing a conservative/traditionalist bent who seek a return to outdated social values as opposed to a broader dismantling of stifling gender expectations, but I think that they’re in the minority. There are also those who fail to make the distinction between pro-women’s rights and feminism, or even the different feminisms which manifest in different contexts (e.g., the averaged understanding of feminism in youth-centric communities like Tumblr vs. the equity feminism of someone like Christina Hoff Sommers vs. the dictionary definition of feminism vs. contemporary academic feminists; it’s possible for each of these groups to be wrong in some of the same ways, but not all of the same ways – a point often overlooked). But this sort of misapprehension happens on both sides of the men’s/women’s-rights discourse, of course, and is no more or less unfortunate whenever it does. My hope is that we can avoid it more and more over time, but it’s a faint hope.

And, of course, there’s simply the “internet factor” to consider, whereby someone will understand what you’re saying in a narrow sense before going on to assume far too much about your intent. They then overemphasize their degree of affiliation with you beyond any reasonable measure of confidence by implying shared perspectives you have not explicitly endorsed, which is probably happening here. It’s lame, and I sympathize. It’s a small consolation, but know that in at least some of these cases, this stems from genuine, sincere relief at having found a comprehensive analysis in support of a view they hold dear, but have been made to feel marginalized by. This view might be part of a constellation of views they also hold under the umbrella of a broader description, hence the undue association.

To someone who, like me, is deeply concerned about systemic inequality in all its forms but is nonetheless also convinced that men have an especially challenging uphill climb in regards to the public’s perceptions of issues they disproportionately face due to the fundamentals of social psychology (among other factors), work of the sort you’ve done here – articulate, clear, rational, thorough – is invaluable in that specific sense. But I’d also deeply value those qualities when applied to any other topic open to intellectual consideration, so I can safely say that if you keep up the standards you’ve demonstrated here, you’ll definitely find a deserving audience who explicitly and apolitically recognizes the merit of your methods, and the rigor of your analysis, over time. Thanks again.

Francis,

Thanks for the valuable contribution to this subject. I’d long wanted to post about this, so now I can just link.

It doesn’t look like you have a reference to the 2006 Rumney paper, which is a small meta study. Here is the link:

http://eprints.uwe.ac.uk/6478/1/Download.pdf

This paper is really useful, because it references other papers that compute false allegation rates, and all of them have both the number of false allegations, as well as the total number of allegations, so it is possible to compute a weighted average.

With a sample size of 8620, it’s a reasonable N for analysis. I get a weighted average of 11.4% +/- 2.4%

Here is a link to a Google spreadsheet with the calculations:

[Ed note: link removed. Sorry, I’m always a bit leery of links to google docs from people I don’t know]

eric.

Eric,

I think one of the problems with doing a weighted average of the existing studies is that we get to a number that isn’t particularly useful, particularly when there are flaws in the underlying research. My broader point here is that due to the complicated nature of proving that a rape did or did not happen (often there is only he said/she said), there are very few times when we can conclusively determine that the report was true or false.

It’s fantastic that you’re doing this kind of analysis, and I look forward to whatever subject you decide to tackle next.

One of my pet concerns is that there can be subtle differences between what a study shows and what questions a policy analyst needs to answer, and this can lead to misunderstandings and confusion. For example, the study methodology may be strong for what it shows, but the slightly different question raised by the analysts may cross a line that leads to poorly supported conclusions. It also doesn’t help when two different policy analysis questions are confused when interpreting the same data.

Along those lines, I have a few comments. In discussing the 1977 Clark and Lewis study’s decision to exclude false reports made by people other than the putative victim, you say, “It would seem that rape reports made by someone other than the victim do not count as false despite being, well, false.” I think you’re clearly correct if the question is whether the report is false, but in popular discussion the question is often phrased as “Should we believe the woman?” In that case, it makes sense to omit false reports by other people. (Of course, true reports by other people should also be omitted. It probably doesn’t make sense to commingle two such different sources of accusations when the question is honesty.) In other words, not all unfounded rape reports are the result of the victim lying. These are two separate questions.

There’s a similar concern with the MAD study, in that the baseless and informational results do indicate that no rape occurred, but they don’t necessarily mean that the victim was lying. She could have been telling the complete truth about an unpleasant event that nevertheless did not meet the legal criteria for rape. A further complication arises if the actual question under discussion is whether the rape laws or police investigation procedures are effective or need to be changed. In that case, the baseless and informational categories may indicate situations where the victim is again telling the truth and may have suffered actual harm, but the law is unable to address the problem. This is yet another question, and a much more complicated one that includes a lot of value judgements, so the study may not be helpful.

Over the years I’ve seen more than a few contentious arguments which could have been avoided — or at least been made more productive — if the participants had understood that they were addressing different questions.

Windy,

Those are all very good points. In an earlier draft of my post I included a comment about how I’m more critical of the people who use the paper to make claims that the study wasn’t set up to make, rather than the authors of the article. My rationale for this was that given the target audience of the paper (prosecutors), in my first reading of their article I read it more as an attempt to advocate the position to always fully investigate rape complaints even if parts seem unlikely or turn out to be incorrect. After writing everything out, though, I decided to take it out because there were too many misleading omissions and questionable prosecutorial recommendations for me to continue to believe that the truth was their real goal. Don’t get me wrong, I’m still more upset at the way the study is used by others than by what the authors did, but I don’t feel they are completely free of blame either. In the end, I attribute what they did more to being blinded by their own advocacy than to some sort of malice.

To your point about the Clark and Lewis study, the question the authors answered was literally stated as “How Many Sexual Assault Reports are False?” and their stated definition of a false report was “A false report is a report of a sexual assault that did not happen.” That is why I made my arguments the way I did. You are correct, if you change the question being asked, the analysis of underlying studies must change as well.

I initially got interested in the subject in the context of lowering the burden of proof for college hearings on the issue, so I think the most relevant question for me at the moment is more about the proper degree of due process for the accused. In that context, I think false reports made by someone other than the victim still need to be counted. However, to answer that type of question the more relevant adjustment that would need to be made would be to exclude rape reports made against an unknown assailant and only look at those where a specific person was identified.

For the MAD study when looking at baseless vs false, I turn to the example that the organization has used for baseless – someone who reports a rape due to feeling obligated to have sex to prevent a breakup. In that particular example, I don’t find the fact that there was no lie in the report particularly compelling. Though, if you want to make the argument that it isn’t strictly a false report, then the proper adjustment to make is to exclude those types of situations from the totals altogether. This would, of course, lead to the false report % rising due to a smaller total number of eligible reports.
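The denominator effect described above can be sketched in a few lines of Python. The counts are hypothetical and chosen only to make the arithmetic easy to follow; they are not taken from the MAD study, and the helper name `false_rate` is purely illustrative.

```python
def false_rate(false_reports, total_reports, excluded=0):
    """Share of false reports after removing `excluded` cases from the total."""
    eligible = total_reports - excluded
    if eligible <= 0:
        raise ValueError("no eligible reports left")
    return false_reports / eligible

# Hypothetical counts for illustration only.
total, false_count, baseless = 100, 6, 10

kept_in_total = false_rate(false_count, total)                # baseless counted as not false
excluded_entirely = false_rate(false_count, total, baseless)  # baseless dropped from the total
counted_as_false = false_rate(false_count + baseless, total)  # baseless counted as false

print(f"baseless kept in total:     {kept_in_total:.1%}")
print(f"baseless excluded:          {excluded_entirely:.1%}")
print(f"baseless counted as false:  {counted_as_false:.1%}")
```

With these made-up numbers, dropping the baseless cases shrinks the denominator from 100 to 90, so the same six false reports make up a larger share.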

Great post, but I think there may be an even bigger flaw in the stats that I don’t see you having noticed (I’m reading on an iPhone so pardon if I missed it). Lisak et al. don’t account for what used to be called “non-crime” and for that reason don’t properly interpret the police reports.

Non-crime means the accuser described facts that, even if true, would not be a crime. An example is a woman in the 1970s accusing her husband of rape. Even if what she said were true, it just wasn’t illegal.

That’s still a big issue in rape accusations today, because the legal definitions of “consent” and “rape” are very, very complex. Many people believe rape is “sex without consent” and consent means “wanting it to happen” or saying yes. In reality consent under the law is closer to meaning that the person was capable of consent and didn’t object. (Not precisely but roughly.) So it’s entirely possible for an accuser to tell the truth and genuinely believe that she was raped but actually to not have been. I suspect, reading recent public accusations, that this is very common.

It’s a particular issue with interpreting police reports and prosecutor statements, which typically will say there isn’t sufficient evidence. That sounds like they’re not sure they believe the accuser or can’t prove her story. Actually though, in rape cases, it’s more likely to mean the accuser alleged facts that, if true, wouldn’t constitute a rape. Non-crime.

This is the biggest difference between Lisak et al. and preceding studies. Lisak et al. only ask if the accuser is lying – not whether she’s alleging non-crime.

This post didn’t really deal with Lisak’s study. I discussed the Lisak study in a later post here: http://www.datagoneodd.com/blog/2015/06/28/what-do-we-know-about-false-rape-allegations/

this dealt with a study in the 2000s – marital rape is no longer a legal thing. i suppose that if you were to say that you were scared and didn’t object because of that, it’d fall into the section about missing elements of a crime, but that is a limited part of the analysis, and then you have to get into the rather hairy question of where that line is and should be. it’s rather difficult to make a determination with the data as presented.

3rd paragraph, for the author really: does the unsubstantiated category refer to cases where the accuser can’t prove her story, or simply that the claims don’t describe a crime? the example given would tend to suggest the latter.

Excellent article and study of a complex issue. As a retired law enforcement officer from a large city police force, I would like to add some points to the discussion. First is that false rape reports are problematic in that they not only are detrimental to the falsely accused, but also erode trust in the validity of legitimate rape reports. My individual professional anecdotal experience is that the false report rate is significantly higher than 8% and that most false reports are by complainants in their late teens or early twenties. One procedural difference between police agencies may greatly affect false report rates. Most false reports in my department are determined upon initial investigation, with the victim’s story falling apart fairly quickly. All rape cases in my agency are investigated by a stand-alone sex crimes unit that is immediately involved in initial investigations. My agency will not charge that “victim” with false report and will not record the rape allegation in any statistical record. The reasoning is that if a complaint number for a rape has not been generated, it does not have to be cleared as a false report. Some in law enforcement would argue that it is not a false rape report until supporting depositions or complaints are falsely attested to by the victim. I also agree that a false claim of a weapon used during the rape should not necessarily discount the victim’s rape claim. I have had numerous male victims of larceny claim they were robbed at weapon point when they were merely scammed or were victims of strong-arm robbery. Rape is a horrific crime that is very traumatic for the victim. Minimizing the existence of false reporting does nothing to serve justice to actual victims.

Joe, a recent (botched) report on SAKs from Detroit says that police there also recognize young women as much more likely to make false allegations (though the “typical” example cited is a girl coming home past curfew). See p. 113 in https://www.ncjrs.gov/pdffiles1/nij/grants/248680.pdf

Sorry for the many grammatical errors, I failed to proofread before posting.

I think the main difference in dynamics is not geographical but the size of the city. The social dynamics of a small-town community (the one considered in Kanin’94) – that is, the need to “save face” if a young girl gets pregnant, or to avoid premarital sex, or even access to sex education, and where police departments are probably not overloaded with other police work – are WILDLY different from big cities. See the video in http://www.localnews8.com/news/local-sheriff-reacts-to-rape-kit-legislation/38515374 from a Sheriff of a small Idaho community (comparable to Kanin’94) saying EXACTLY the same thing as Kanin – both quantitatively and qualitatively – and getting TONS of heat for it.

Here’s a doubt I have:

When you say, only 1-8% is true and only 2-8% is false, my doubt is, can that be extrapolated here?

One good hypothetical example: let’s say it’s found that 20% of all pickpocketing is reported on public transport, 30% in other locations, and 50% in unverifiable locations. Can we extrapolate that two fifths of pickpocketing happens on public transport, so that resources can be proportionally or adequately deployed on prevention or investigation (like cameras or signs warning people to check their belongings)?

Or is it too much of a stretch? Can the part of data where a detail is missing be extrapolated by data where a detail isn’t missing? And if it can, when it can, in which circumstances those extrapolations can be made?
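To make the pickpocketing example concrete, here is a small Python sketch using the 20% / 30% / 50% split from the example. It shows the range the true share could fall in, plus the single figure you get if you assume the unknown-location cases look just like the known ones. That proportionality assumption is exactly what is in question, and the function name `share_bounds` is made up for illustration.

```python
def share_bounds(known_yes, known_no, unknown):
    """Return (lower, proportional, upper) estimates for the 'yes' share."""
    total = known_yes + known_no + unknown
    lower = known_yes / total                          # no unknown case is a 'yes'
    upper = (known_yes + unknown) / total              # every unknown case is a 'yes'
    proportional = known_yes / (known_yes + known_no)  # unknowns mirror the knowns
    return lower, proportional, upper

# Percentages from the pickpocketing example: public transport, other, unverifiable.
lower, proportional, upper = share_bounds(20, 30, 50)
print(f"public transport share: at least {lower:.0%}, at most {upper:.0%}")
print(f"if unknowns mirror the known cases: {proportional:.0%}")
```

Without some reason to believe the unverifiable cases resemble the known ones, the data alone only supports the wide range, not the single proportional figure.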

For one example, I wouldn’t think that extrapolations can be made when the section of the data with missing information is too small, as this data could be missing due to the specific nature of what made that information missing.

One good example of this is the race (which is a loosely defined group of phenotypes) of an attacker in murder cases. It could be that something in mostly black inner cities, or mostly white inner cities, or suburbs, makes it easier to murder someone without leaving a witness or other things that would help identify an attacker, so the set with this data missing could in reality be proportionally consistent with the other data or highly skewed one way or the other.

But where the portion of the data missing is sufficiently high I think there’s a bigger chance it would be consistent in reality with other data as it’s most likely due to the nature of the crime itself. (well technically this works for other stuff as well)

We do gather data to extrapolate it over way more cases where it hasn’t happened yet, so we can be better prepared, does it mean we can extrapolate it for cases that have happened but we don’t have that specific detail?

Of course there’s also looking at other known data of the incidents to see if it matches with other known data of the incident and how it correlates and what can be extrapolated from it. For one example, one known car crash without the data of why it happened has all other signs consistent with texting and driving, it’s just that both drivers drove off and couldn’t be located for inquiry. We might be justified in assuming it’s another case of texting and driving and add it to the statistic of accidents caused by texting and driving.

The point being made was that if you want to say these studies find that only 2-8% are false, then you can use the same flawed logic to say only 1-8% are true. Neither would be a particularly defensible or honest interpretation of the data though.